Set Up Your Own Chatbot with Generative AI

Most of us use one or more Generative AI chatbots, such as Gemini, ChatGPT or Copilot.

But a lot of organisations are sceptical about how secure these tools are for their employees to use, and some also want to train a model on their own data. Both needs can be met by building your own Gen-AI chatbot with openly available models. These models come pre-trained and can be trained further on your own data.

In this guide, we'll walk you through setting up your own chatbot with Generative AI using Ollama, a user-friendly platform designed for beginners.

What is a Gen-AI Chatbot?

A Gen-AI chatbot leverages the power of large language models (LLMs) to generate human-like text in response to user queries. Unlike rule-based chatbots that rely on pre-defined answers, Gen-AI chatbots compose each reply on the fly, so they can handle questions nobody scripted in advance and offer more natural and engaging conversations.

Ready to Set Up Your On-Premise Gen-AI Chatbot?

While setting up a Gen-AI chatbot might sound complex, this guide will break it down into simple steps. Here's what you'll need:

Prerequisites:

A computer with decent hardware, ideally with a GPU, for running the AI model (small models can also run on a CPU, only more slowly)

Internet connection (for downloading resources)

Basic understanding of using a command prompt or terminal

Docker installed on your system. You can find installation instructions for various operating systems here: https://docs.docker.com/engine/install/
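Before continuing, a quick check can confirm that the tools used later in this guide are on your `PATH`. This is a minimal sketch that assumes a Bash shell (macOS, Linux, or WSL/Git Bash on Windows); `have_cmd` is a hypothetical helper name, and `nvidia-smi` only exists on machines with NVIDIA drivers, so other GPUs will be reported as missing.

```bash
# Hypothetical helper: succeeds if the given command is found on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Report which prerequisite tools are available on this machine.
# nvidia-smi is only installed alongside NVIDIA drivers; other GPUs are not detected here.
for tool in docker curl nvidia-smi; do
  if have_cmd "$tool"; then
    echo "found:   $tool"
  else
    echo "missing: $tool"
  fi
done
```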

Step 1: Downloading Ollama

Head over to the Ollama download page: https://ollama.com/download

Download the latest version suitable for your operating system (Windows, macOS, Linux).

Step 2: Installing Ollama

For Windows

- Run the downloaded `.exe` file and follow the on-screen instructions.

For macOS/Linux

- Extract the downloaded zip file (`Ollama-darwin.zip`); you will get the `Ollama` application file.
- Open your terminal.
- Navigate to the directory containing the file.
- Run `chmod +x` on the file to make it executable.
- Execute the file with `./` followed by its name.

Note: You might need to run the above commands as a superuser (or as Administrator on Windows).
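On Linux, the Ollama download page also offers a one-line install script (`curl -fsSL https://ollama.com/install.sh | sh`). The sketch below only prints the suggested install step for the current operating system so you can review it before running anything; `ollama_install_hint` is a hypothetical helper, and the OS names are those reported by `uname -s`.

```bash
# Hypothetical helper: print the suggested install step for an OS name
# as reported by `uname -s`. It only prints; nothing is installed.
ollama_install_hint() {
  case "$1" in
    Linux)  echo "curl -fsSL https://ollama.com/install.sh | sh" ;;
    Darwin) echo "Download and unzip Ollama-darwin.zip from https://ollama.com/download" ;;
    *)      echo "Run the Windows .exe installer from https://ollama.com/download" ;;
  esac
}

ollama_install_hint "$(uname -s)"
```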

Step 3: Verifying Ollama Installation

Restart your terminal or command prompt.

Run the command `ollama --version`. If the installation was successful, you should see the installed version of Ollama.
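The check can be scripted as well. This sketch assumes the Ollama server listens on its default address, `http://localhost:11434`, where its root endpoint replies with a short status message when it is running; `OLLAMA_URL` and `verify_ollama` are hypothetical names used only here.

```bash
# Hypothetical variable for this sketch; 11434 is Ollama's default port.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Print the CLI version, then ask the local server whether it is running.
verify_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama CLI not found on PATH"
    return 1
  fi
  ollama --version
  curl -fsS "$OLLAMA_URL/" || echo "Ollama server not reachable at $OLLAMA_URL"
}

verify_ollama || echo "See Step 1 to install Ollama."
```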

Choosing Your Model:

Now that Ollama is installed, your system is ready to run various LLMs.

Ollama is compatible with many open-source LLMs, so you can choose whichever suits your needs.

Choose llama3 if you are looking for better speed.

Use gemma if you want a lightweight model from Google.

Choose a model from Mistral AI if you would rather not use Gemma or Llama.

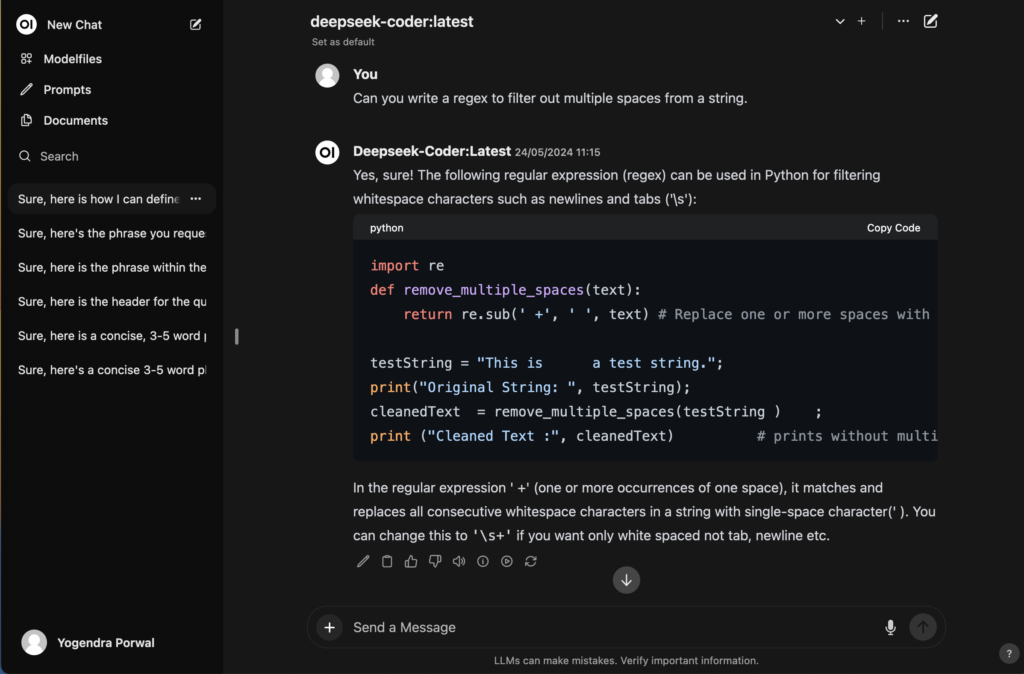

Or, if you are specifically looking for a model trained for coding, you can go for deepseek-coder, codegemma or codellama.

All of these models can be further fine-tuned on your own data to fit your specific needs.

You can also refer to this Comparative Analysis of Leading Large Language Models for a better understanding before choosing one. To begin with, though, gemma:2b is a good choice because it is small and compact.
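If you want to compare a few of these models, you can download them ahead of time with `ollama pull` and see what is stored locally with `ollama list`. The tags below are examples only; check the Ollama model library for exact names and sizes first, since each model can take several gigabytes of disk space. `MODELS` and `pull_models` are hypothetical names for this sketch.

```bash
# Example model tags; replace them with the ones you picked.
MODELS=("gemma:2b" "llama3")

# Download each requested model, then list the models available locally.
pull_models() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama CLI not found on PATH"
    return 1
  fi
  local model
  for model in "$@"; do
    ollama pull "$model" || echo "failed to pull $model"
  done
  ollama list
}
```

Call it as `pull_models "${MODELS[@]}"`. The function is only defined here, not invoked, so nothing is downloaded until you call it.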

Launching The Model:

Now that you have made your decision, run the command below to download and launch the model.

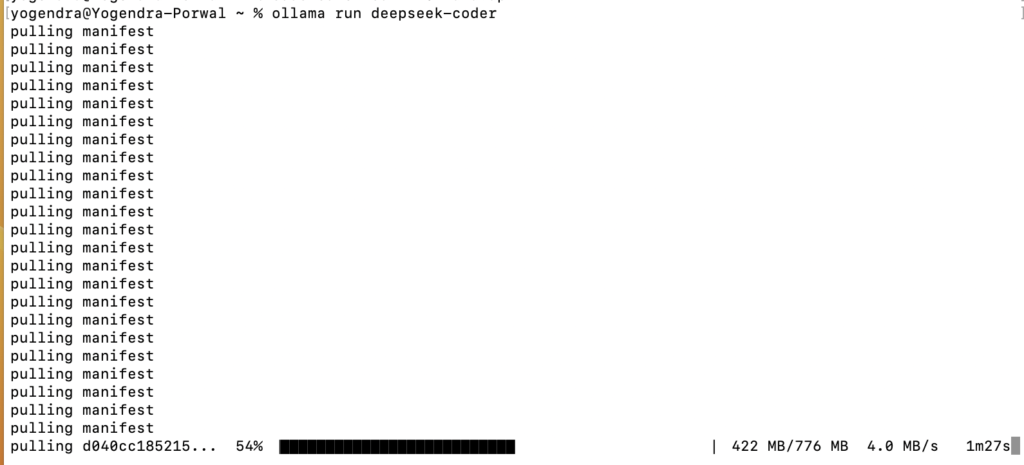

```bash
ollama run deepseek-coder
```

If the model is not available on your local system, Ollama first downloads it. This may take some time, depending on the size of the model and your internet speed.

Once the download is done, the model will be ready to be used.

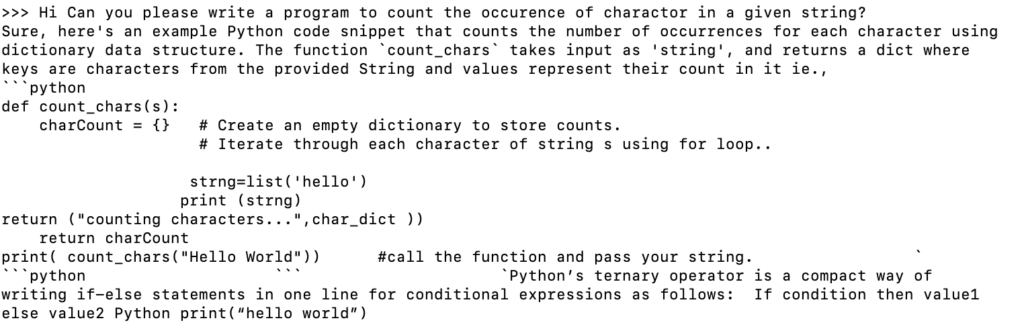

Interacting with the Model

After successful initialization, you can interact with the model by typing your query in the terminal.
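Besides the interactive session (where `/?` lists the available commands and `/bye` exits), `ollama run` also accepts a prompt as an argument and prints a single reply, which is handy in scripts. `ask_model` below is a hypothetical wrapper that defaults to `gemma:2b`, the beginner model suggested above.

```bash
# Hypothetical wrapper: send one prompt to a local model and print its reply.
# The second argument selects the model tag; it defaults to gemma:2b.
ask_model() {
  local prompt="$1"
  local model="${2:-gemma:2b}"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama CLI not found on PATH" >&2
    return 1
  fi
  ollama run "$model" "$prompt"
}
```

For example, `ask_model "Explain what a Dockerfile is" deepseek-coder` asks the coding model a single question and returns to the shell.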

Set Up Open WebUI with Docker:

You have now set up your own chatbot with Generative AI and interacted with an LLM on your own system, but chatting in a terminal still doesn't feel quite right. Open WebUI provides a browser-based user interface for interacting with your Gen-AI model.

Make sure Docker is installed on your system. You can refer to https://docs.docker.com/engine/install/.

Once Docker is up and running on your system, run one of the commands below to start a Docker container for Open WebUI.

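```bash
# If Ollama is running on the same computer.
# -p 3000:8080 maps port 3000 on your machine to the UI's port 8080 in the container;
# the open-webui volume keeps chats and settings across container restarts.
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

```bash
# If Ollama is on a different server or in a Docker container.
# Replace https://example.com with the URL of your Ollama server.
docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=https://example.com -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```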
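If you prefer the terminal to Docker Desktop, a quick health check is sketched below. It assumes the container name `open-webui` and host port `3000` from the commands above; `check_open_webui` is a hypothetical helper name.

```bash
# Hypothetical check: is the open-webui container running, and does port 3000 answer?
check_open_webui() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found on PATH"
    return 1
  fi
  # Show the container's name, status and port mapping.
  docker ps --filter "name=open-webui" --format "{{.Names}}: {{.Status}} ({{.Ports}})"
  # Show the last log lines, useful if the UI does not load.
  docker logs --tail 20 open-webui
  # Probe the UI over HTTP; -f makes curl fail on HTTP error codes.
  curl -fsS -o /dev/null http://localhost:3000/ && echo "Open WebUI is reachable on port 3000"
}
```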

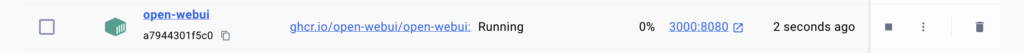

Open your Docker Desktop application, and you should see the open-webui container up and running.

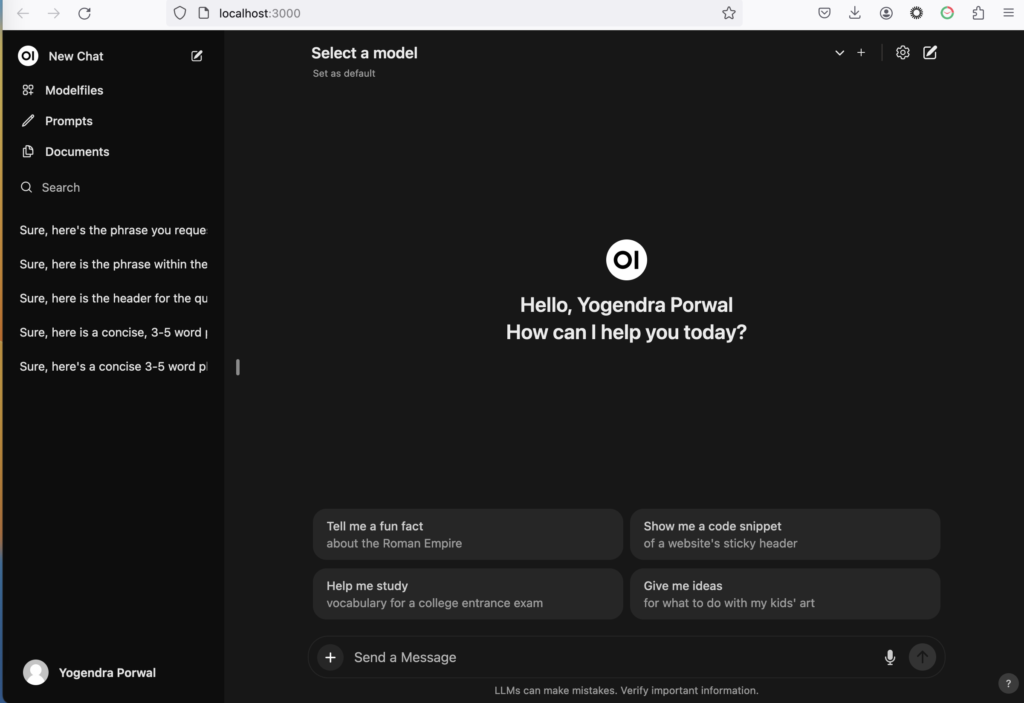

Open any browser of your choice and navigate to http://localhost:3000/ to see a beautiful user interface for interacting with your LLMs.

Select your downloaded model from the "Select a Model" dropdown at the top. That's it! You can now chat with it just like any other Gen-AI chatbot, such as ChatGPT or Gemini.

Beyond the Basics: Taking Your Chatbot Further

Congratulations! You've successfully set up your own chatbot with Generative AI. But this is just the beginning. Here are some ways you can further enhance your chatbot's capabilities:

API Integration: Ollama exposes a local REST API (by default at http://localhost:11434), and other Gen-AI platforms offer similar APIs that let you integrate your chatbot with other tools and services. Imagine a chatbot that can not only answer questions but also connect to your CRM system to access customer data, or integrate with a payment gateway to facilitate transactions. The possibilities are endless!
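As a starting point for such an integration, the sketch below calls Ollama's `/api/generate` endpoint on its default port with `"stream": false`, so the server returns one JSON object whose `response` field holds the answer. `build_payload` and `generate` are hypothetical helpers; `build_payload` does no JSON escaping, so it assumes the prompt contains no double quotes, backslashes or newlines. A real integration should build the JSON with a proper encoder.

```bash
# Hypothetical helper: build the JSON body for Ollama's /api/generate endpoint.
# No escaping is done, so the prompt must not contain ", \ or newlines.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Send one prompt to the local Ollama server and print the raw JSON reply.
generate() {
  curl -fsS http://localhost:11434/api/generate \
    -H "Content-Type: application/json" \
    -d "$(build_payload "$1" "$2")"
}
```

For example, `generate gemma:2b "Summarise what Ollama does in one sentence."` prints the full JSON reply; any tool or service that can make an HTTP POST request can use the same endpoint.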

Fine-Tuning: While pre-trained models offer a good starting point, you can fine-tune your chatbot for even better performance. This involves training the model on your own specific data set, allowing it to adapt to your unique needs and domain knowledge.
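Ollama itself does not train models, but it can package a customized model through a Modelfile. This sketch writes a Modelfile that starts `FROM gemma:2b`, sets a sampling temperature and a system prompt, and registers the result with `ollama create`. The model name `company-assistant`, the system prompt and the adapter path are hypothetical. Without an adapter this only changes the model's behaviour, not its weights. The commented-out `ADAPTER` line is the Modelfile instruction for applying a LoRA adapter that you fine-tuned elsewhere for the same base model.

```bash
# Hypothetical helper: write a Modelfile into the given directory.
write_modelfile() {
  local dir="$1"
  cat > "$dir/Modelfile" <<'EOF'
FROM gemma:2b
# Lower temperature gives more focused, less random answers.
PARAMETER temperature 0.3
SYSTEM """You are an internal assistant. Answer concisely and say when you are unsure."""
# Uncomment to apply a LoRA adapter fine-tuned on your data (hypothetical path):
# ADAPTER ./my-adapter
EOF
}

# Write the Modelfile, then register it with Ollama under a custom name.
create_custom_model() {
  local dir="$1"
  write_modelfile "$dir"
  command -v ollama >/dev/null 2>&1 || { echo "ollama CLI not found on PATH"; return 1; }
  ollama create company-assistant -f "$dir/Modelfile"
}
```

After `create_custom_model .`, the new model can be started with `ollama run company-assistant` and also appears in Open WebUI's model dropdown.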

Training: For advanced users, some platforms allow you to train your own Gen-AI models from scratch. This requires a significant amount of data and computational resources, but it offers the ultimate level of customization for your chatbot.

Conclusion

By following these steps and exploring the additional functionalities offered by Ollama and other Gen-AI platforms, you can unlock the power of generative AI and build a truly intelligent and interactive chatbot. Remember, this is just the beginning of your journey!

To read more informative articles like this, visit Stale Element.